## Loading Python libraries

# necessary to display plots inline:

%matplotlib inline

# load the libraries

import matplotlib.pyplot as plt # 2D plotting library

import numpy as np # package for scientific computing

from math import * # package for mathematics (pi, arctan, sqrt, factorial ...)

AdjacencyMatrix=np.matrix([[0,1,0,0],[1,0,1,1],[1,0,0,0],[1,0,0,0]])

n=20

B=AdjacencyMatrix**n

print(str(B[3,3])+' paths of length '+str(n))

#print(B)

488 paths of length 20
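The computation above relies on the fact that entry $(i,j)$ of the $n$-th power of an adjacency matrix counts the walks of length $n$ from vertex $i$ to vertex $j$. As a sanity check, here is a minimal sketch that compares the matrix power with an explicit enumeration for small lengths. The helper `count_walks_brute_force` and the lowercase name `adjacency` are hypothetical and are not part of the original notebook.

```python
import numpy as np

# Same adjacency matrix as above, stored as a plain integer array
adjacency = np.array([[0, 1, 0, 0],
                      [1, 0, 1, 1],
                      [1, 0, 0, 0],
                      [1, 0, 0, 0]])

def count_walks_brute_force(adj, start, end, length):
    """Count walks of the given length from start to end by explicit recursion."""
    if length == 0:
        return 1 if start == end else 0
    total = 0
    for nxt in range(adj.shape[0]):
        if adj[start, nxt]:
            total += count_walks_brute_force(adj, nxt, end, length - 1)
    return total

# Compare both counts for closed walks based at vertex 3
for length in range(1, 9):
    by_power = np.linalg.matrix_power(adjacency, length)[3, 3]
    by_enumeration = count_walks_brute_force(adjacency, 3, 3, length)
    print(length, by_power, by_enumeration)
```

The enumeration takes exponential time, so it is only usable for small lengths. The matrix power handles $n=20$ immediately.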

# Matrices of b-short words

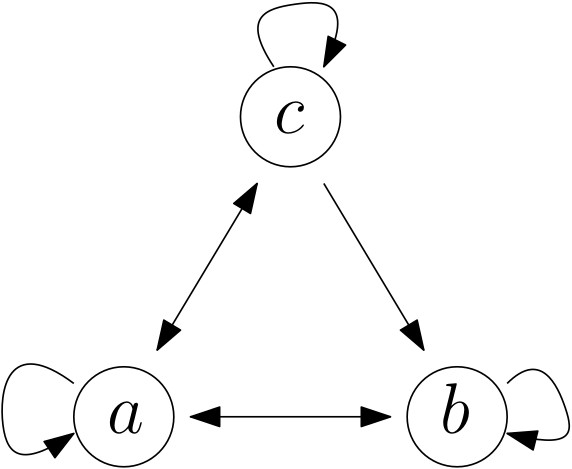

Adjacency_abc=np.matrix([[1,1,1],[1,1,0],[1,1,1]])

#print('Adjacency matrix : ')

#print(Adjacency_abc)

List=[]

for n in range(1,21):

    Mat=Adjacency_abc**(n-1)

    List.append(np.sum(Mat))

    print('Number of words of size n=',n,': ',np.sum(Mat))
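To see why summing the entries of the $(n-1)$-th power counts words of length $n$, it may help to check the result by brute force. The sketch below assumes that rows and columns of `Adjacency_abc` are ordered a, b, c (an assumption, since the figure is not reproduced here). Under that reading, the only zero entry forbids the letter b from being followed by c. The function names are hypothetical.

```python
from itertools import product
import numpy as np

# Assumed ordering of rows/columns: a, b, c; entry (b, c) is 0
adjacency_abc = np.array([[1, 1, 1],
                          [1, 1, 0],
                          [1, 1, 1]])

def count_words_brute_force(n):
    """Count words of length n over {a, b, c} that never contain 'bc'."""
    return sum(1 for letters in product("abc", repeat=n)
               if "bc" not in "".join(letters))

def count_words_matrix(n):
    """Sum all entries of the (n-1)-th power of the adjacency matrix."""
    return int(np.linalg.matrix_power(adjacency_abc, n - 1).sum())

for n in range(1, 9):
    print(n, count_words_matrix(n), count_words_brute_force(n))
```

A word of length $n$ corresponds to a walk with $n-1$ steps in the automaton, which explains the exponent $n-1$.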

# Matrices of b-short words

Adjacency_bShort=np.matrix([[1,1,0,0],[1,0,1,0],[1,0,0,1],[1,0,0,0]])

#print('Adjacency matrix : ')

#print(Adjacency_bShort)

for n in range(1,21):

    Mat=Adjacency_bShort**(n-1)

    MatBis=Mat[0:2,]

    print('For n = ',n,' there are ', np.sum(MatBis),' b-short words')

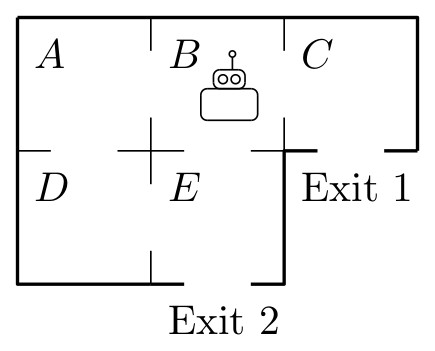

We consider a random robot in the following labyrinth:

A=np.matrix([

[0,0.5,0,0.5,0,0,0],

[1/3.0,0,1/3.0,0,1/3.0,0,0],

[0,0.5,0,0,0,0.5,0],

[1/2.0,0,0,0,1/2.0,0,0],

[0,1/3.0,0,1/3.0,0,0,1/3.0],

[0,0,0,0,0,1,0],

[0,0,0,0,0,0,1],

])

#print(np.round(A**80,3))

def ProbaDistribution(n):

    Power=np.linalg.matrix_power(A,n)

    return Power[1,:]

print('------- Question 1 -----')

print('Approximation of probability distributions:')

for n in [5,100]:

    print('p(',n,') = ',str(np.round(ProbaDistribution(n),3)))

print('------- Question 2 -----')

for n in [100]:

    Distribution=ProbaDistribution(n)

    print('At time n=',n,', the robot is at Exit 1 with probab. '+str(np.round(Distribution[0,5],6)))

def ProbaNoEscape(n):

    Distribution=ProbaDistribution(n)

    return 1-Distribution[0,5]-Distribution[0,6] # returns 1-Proba(Exit 1)-Proba(Exit 2)

N=30

Proba=[ProbaNoEscape(n) for n in range(N)]

plt.plot(Proba,'o-')

plt.show()

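# Linear system for the probabilities of leaving by Exit 1, starting from each of the 5 rooms.

# ExitSystem = Identity - (transitions between rooms); renamed so that the transition matrix A is not overwritten

ExitSystem=np.array([[1,-1/2,0,-1/2,0],

[-1/3,1,-1/3,0,-1/3],

[0,-1/2,1,0,0],

[-1/2,0,0,1,-1/2],

[0,-1/3,0,-1/3,1]])

# One-step probabilities of reaching Exit 1 from each room

ExitRhs=np.array([0,0,1/2,0,0])

print(np.linalg.solve(ExitSystem, ExitRhs))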
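The linear system above can be cross-checked against a large power of the transition matrix, since column 5 of $P^n$ converges to the probabilities of eventually leaving by Exit 1. The following is a minimal sketch under that reading. The names `P`, `Q`, `b` and the exponent 500 are illustrative choices, not taken from the original notebook.

```python
import numpy as np

# Transition matrix of the labyrinth (states 0-4: rooms, 5: Exit 1, 6: Exit 2)
P = np.array([
    [0, 1/2, 0, 1/2, 0, 0, 0],
    [1/3, 0, 1/3, 0, 1/3, 0, 0],
    [0, 1/2, 0, 0, 0, 1/2, 0],
    [1/2, 0, 0, 0, 1/2, 0, 0],
    [0, 1/3, 0, 1/3, 0, 0, 1/3],
    [0, 0, 0, 0, 0, 1, 0],
    [0, 0, 0, 0, 0, 0, 1],
])

Q = P[:5, :5]   # transitions between rooms only
b = P[:5, 5]    # one-step probabilities of reaching Exit 1

# Solve (I - Q) x = b, the same system as in the cell above
exit1_by_solve = np.linalg.solve(np.eye(5) - Q, b)

# Approximate the same probabilities with a large power of P
exit1_by_power = np.linalg.matrix_power(P, 500)[:5, 5]

print(np.round(exit1_by_solve, 6))
print(np.round(exit1_by_power, 6))
```

Both vectors should agree up to numerical error. Solving the system is exact, while the matrix power is only a limit approximation.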

A player plays the following game:

Here is an example with $T=12$: $$ 0 \stackrel{\text{dice = }3}{\longrightarrow} 3 \stackrel{\text{dice = }5}{\longrightarrow} 8\stackrel{\text{dice = }1}{\longrightarrow} 9\stackrel{\text{dice = }4}{\longrightarrow} 13\ \text{(Lost)} $$

T=9 # target

# We consider a transition matrix

Matrix_target=np.zeros([T+6,T+6])

for i in range(T):

    for j in range(i+1,i+7):

        Matrix_target[i,j]=1/6

for l in range(T,T+6):

    Matrix_target[l,l]=1

#print(np.round(Matrix_target,2))

# Transition matrix raised to the power T:

Power=np.linalg.matrix_power(Matrix_target,T)

# Test: should return 0.2803689

print('Test: should be equal to 0.2803689:')

print(Power[0,T])
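The plotting cell that follows calls `WinningProbability`, which is not defined in the cells shown here. A minimal sketch is given below, assuming the signature `WinningProbability(T, faces)` returns the probability of landing exactly on the target `T` with a fair dice with `faces` faces. This signature is inferred from the call and is an assumption. The body simply wraps the transition-matrix computation above in a function.

```python
import numpy as np

def WinningProbability(T, faces):
    """Probability of landing exactly on target T (assumed signature)."""
    size = T + faces
    transition = np.zeros([size, size])
    # Below the target: each dice outcome has probability 1/faces
    for i in range(T):
        for j in range(i + 1, i + faces + 1):
            transition[i, j] = 1 / faces
    # At or above the target: the game is over (absorbing states)
    for l in range(T, size):
        transition[l, l] = 1
    # After T throws the score is at least T, so the game has ended
    power = np.linalg.matrix_power(transition, T)
    return power[0, T]

print(WinningProbability(9, 6))
```

With `T=9` and `faces=6` this reproduces the test value 0.2803689 quoted above.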

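n=30

XX=range(1,n+1)

# Winning probability as a function of the target; WinningProbability is not defined in this section and must be defined beforehand

plt.plot(XX,[WinningProbability(T,6) for T in XX],'o-')

plt.show()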