Model 1: The frog (or how to become a trader?)

This is a math puzzle that used to be asked in job interviews for positions such as quantitative analyst or trader.

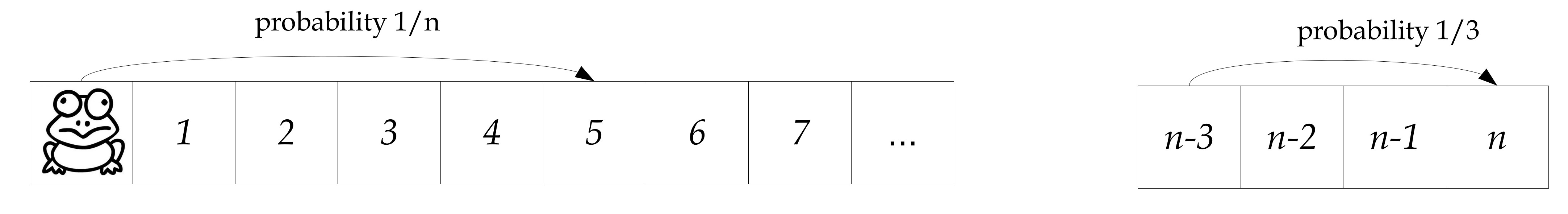

Here is the puzzle: a frog starts on the bank of a river at $x=0$, and the opposite bank is at $x=10$. The frog's first jump lands on a position chosen uniformly at random from $\{1, 2, \dots, 10\}$ (if it lands on $10$, the frog has crossed the river and the process ends). After that, at each time step, if the frog is at some position $y<10$, it jumps to a position chosen uniformly at random from $\{y+1, \dots, 10\}$.
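The jump rule above can be simulated directly. What follows is a minimal Monte Carlo sketch. It assumes the quantity of interest is the number of jumps the frog needs to cross, because the question itself is not stated here. The names `RIVER_WIDTH`, `jumps_to_cross`, and `estimate_mean_jumps` are hypothetical.

```python
import random

RIVER_WIDTH = 10  # the opposite bank is at x = 10

def jumps_to_cross(rng: random.Random, width: int = RIVER_WIDTH) -> int:
    """Simulate one crossing and return the number of jumps taken.

    Assumption: the quantity of interest is the jump count until x = width.
    """
    position = 0
    jumps = 0
    while position < width:
        # From position y < width, land uniformly on {y+1, ..., width}.
        # At y = 0 this is exactly the first jump, uniform on {1, ..., width}.
        position = rng.randint(position + 1, width)  # randint is inclusive
        jumps += 1
    return jumps

def estimate_mean_jumps(n_trials: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate of the expected number of jumps to cross."""
    rng = random.Random(seed)
    total = sum(jumps_to_cross(rng) for _ in range(n_trials))
    return total / n_trials

print(estimate_mean_jumps())
```

The first jump follows the same rule as every later jump (with $y=0$), so a single loop handles both cases. The fixed seed only makes the estimate reproducible. Increasing `n_trials` tightens the estimate.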

(During the job interview, candidates had access to a computer and a programming language.)
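With a computer available, the expectation can also be computed exactly instead of estimated. This is a minimal sketch that assumes the question asks for the expected number of jumps. It uses a standard first-step analysis, which is not necessarily the intended solution, and `expected_jumps` is a hypothetical helper name. Let $r = 10 - y$ be the remaining distance. A jump from distance $r$ lands uniformly on distances $0, 1, \dots, r-1$, which gives $E(0)=0$ and $E(r) = 1 + \frac{1}{r}\sum_{d=0}^{r-1} E(d)$.

```python
from fractions import Fraction

def expected_jumps(width: int = 10) -> Fraction:
    """Exact expected number of jumps to go from x = 0 to x = width.

    expected[r] is the expected number of jumps when r units remain.
    A jump from distance r lands uniformly on distances 0, 1, ..., r - 1,
    so expected[r] = 1 + (expected[0] + ... + expected[r - 1]) / r.
    """
    expected = [Fraction(0)]  # already on the far bank: no jumps needed
    for r in range(1, width + 1):
        # At this point `expected` holds entries for distances 0..r-1.
        expected.append(1 + sum(expected) / r)
    return expected[width]

result = expected_jumps()
print(result, float(result))
```

For `width = 10` this returns `Fraction(7381, 2520)`. That value equals $1 + \tfrac12 + \dots + \tfrac1{10} \approx 2.929$. By induction, the recursion gives the harmonic number $H_n$ for any width $n$.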